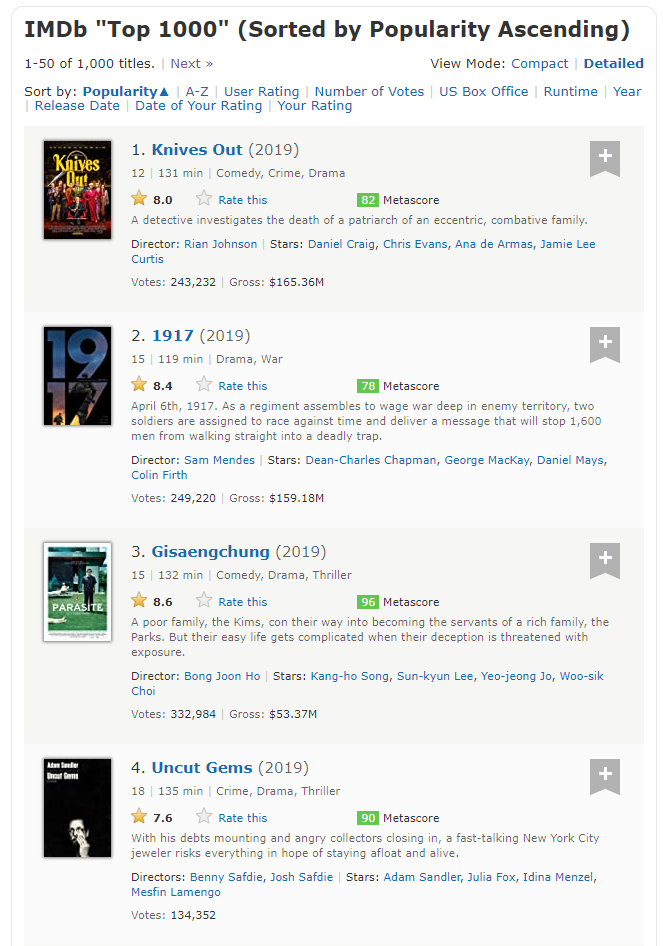

- Crawling target, IMDb Top 1000: https://www.imdb.com/search/title/?groups=top_1000


- Import the modules

```python
import requests
from bs4 import BeautifulSoup
import numpy as np
from fake_useragent import UserAgent
```

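- Create a UserAgent and build the request header

```python
# Pick a random browser User-Agent string so the request looks like it comes from a regular browser
ua = UserAgent(verify_ssl=False)
headers = {'User-Agent': ua.random}
```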

- Request the URL

```python
# The URL must be a quoted string
url = 'https://www.imdb.com/search/title/?groups=top_1000'
req = requests.get(url, headers=headers)
```

- Parse the HTML

```python
soup = BeautifulSoup(req.text, "html.parser")
```

- Inspect the parsed data

* `soup.prettify()`: formats the parse tree as an indented, tree-shaped string

```python
print(soup.prettify())
```

- Identify the data to collect

  - `titles`: movie title
  - `years`: release year
  - `time`: runtime
  - `imdb_ratings`: IMDb rating
  - `metascores`: Metascore
  - `votes`: number of user votes
  - `us_gross`: total US box-office gross

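- Create one list per field

```python
# Each list collects one field; index i in every list refers to the same movie
titles = []
years = []
time = []
imdb_ratings = []
metascores = []
votes = []
us_gross = []
```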

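- Inspect the HTML code: each movie entry on the page is wrapped in a `div` with the classes `lister-item mode-advanced`.

- Collect every movie container on the page

```python
movie_div = soup.find_all('div', class_='lister-item mode-advanced')
```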
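One detail worth knowing about `class_='lister-item mode-advanced'`: with a space in the string, BeautifulSoup matches the `class` attribute as one exact string, so the same two classes in a different order would not match. A minimal sketch on made-up markup (not IMDb's real HTML) comparing that with a CSS selector through `select()`, which matches any `div` carrying both classes:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second div lists the same two classes in reverse order
html = """
<div class="lister-item mode-advanced"><h3><a>Movie A</a></h3></div>
<div class="mode-advanced lister-item"><h3><a>Movie B</a></h3></div>
"""
soup = BeautifulSoup(html, "html.parser")

# Exact string match on the class attribute: only the first div matches
exact = soup.find_all('div', class_='lister-item mode-advanced')

# CSS selector: every div that has both classes matches, in any order
by_selector = soup.select('div.lister-item.mode-advanced')

print(len(exact), len(by_selector))
```

On the IMDb page used here the exact match evidently works, since the class string is fixed in the markup; the selector form is simply less sensitive to attribute order.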

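- Prepare for missing data before extracting

Comparing two movie entries shows that some of them lack fields we want to collect (for example, some movies have no US gross figure).

So we cannot expect every field to be present on every movie, and the code has to handle the missing ones.

Always keep in mind that the parsing code breaks when a field is missing: `find()` returns `None` for a tag that is not there, and calling `.text` on `None` raises an `AttributeError`.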
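A minimal sketch of handling missing fields up front. The markup is made up to mirror the fields in this post, and the helper names `text_or_nan` and `gross_or_nan` are hypothetical. It also assumes, as the `find_next` chain used later in this post implies, that the gross value sits in a second `<span name="nv">` after the votes span:

```python
from bs4 import BeautifulSoup
import numpy as np

# Hypothetical markup: the second movie has no Metascore and no gross
html = """
<div class="lister-item mode-advanced">
  <span class="metascore">82        </span>
  <p><span>Votes:</span><span name="nv" data-value="243378">243,378</span>
     <span>|</span><span>Gross:</span><span name="nv">$165.36M</span></p>
</div>
<div class="lister-item mode-advanced">
  <p><span>Votes:</span><span name="nv" data-value="80965">80,965</span></p>
</div>
"""

def text_or_nan(container, *args, **kwargs):
    """Return the stripped text of the first matching tag, or NaN if find() returns None."""
    tag = container.find(*args, **kwargs)
    return tag.text.strip() if tag is not None else np.nan

def gross_or_nan(container):
    """Assumption: gross is the second <span name="nv"> (votes is the first)."""
    nv_spans = container.find_all('span', attrs={'name': 'nv'})
    return nv_spans[1].text if len(nv_spans) > 1 else np.nan

soup = BeautifulSoup(html, "html.parser")
metascores, us_gross = [], []
for container in soup.find_all('div', class_='lister-item mode-advanced'):
    metascores.append(text_or_nan(container, 'span', class_='metascore'))
    us_gross.append(gross_or_nan(container))

print(metascores)
print(us_gross)
```

Appending `np.nan` instead of skipping the movie keeps every list the same length, so index `i` still refers to the same movie in all of them.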

- Extract the titles

```python
for container in movie_div:
    # The title is the text of the <a> link inside the <h3> heading
    name = container.h3.a.text
    titles.append(name)
```

> Result

```
['Knives Out', '1917', 'Gisaengchung', 'Uncut Gems', 'Jojo Rabbit', 'Once Upon a Time... in Hollywood', 'Joker',
 'Ford v Ferrari', 'Little Women', 'The Shawshank Redemption', 'The Irishman', 'Avengers: Endgame', 'The Gentlemen',
 'Toy Story 4', '28 Days Later...', 'Daeboo', 'The Lighthouse', 'Blade Runner 2049', 'The Dark Knight',
 "Harry Potter and the Sorcerer's Stone", 'Inception', 'Guardians of the Galaxy', 'Marriage Story', 'Goodfellas',
 'Interstellar', 'The Shining', 'Twelve Monkeys', 'Fight Club', 'The Lord of the Rings: The Fellowship of the Ring',
 'Pulp Fiction', 'The Wolf of Wall Street', 'Thor: Ragnarok', 'Sen to Chihiro no kamikakushi', 'Inglourious Basterds',
 'Green Book', 'Titanic', 'Forrest Gump', 'Rapunjel', 'The Matrix', 'Mad Max: Fury Road', 'Call Me by Your Name',
 'There Will Be Blood', 'Mission: Impossible - Fallout', 'Avengers: Infinity War', 'Gone Girl', 'Shutter Island',
 'The Lion King', 'Prisoners', 'Gladiator', 'Dakeu naiteu raijeu']
```

- Extract the release years

```python
for container in movie_div:
    # The year is in a <span class="lister-item-year"> inside the <h3> heading
    year = container.h3.find('span', class_='lister-item-year').text
    years.append(year)
```

> Result

```
['(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(1994)', '(2019)', '(2019)',
 '(2019)', '(2019)', '(2002)', '(1972)', '(I) (2019)', '(2017)', '(2008)', '(2001)', '(2010)', '(2014)', '(2019)',
 '(1990)', '(2014)', '(1980)', '(1995)', '(1999)', '(2001)', '(1994)', '(2013)', '(2017)', '(2001)', '(2009)', '(2018)',
 '(1997)', '(1994)', '(2010)', '(1999)', '(2015)', '(2017)', '(2007)', '(2018)', '(2018)', '(2014)', '(2010)', '(1994)',
 '(2013)', '(2000)', '(2012)']
```
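The years come back as strings with parentheses, and at least one has an extra prefix (`'(I) (2019)'`). A minimal sketch of pulling out just the four-digit year with a regular expression; `parse_year` is a hypothetical helper name, and the pattern assumes the year always appears as four digits inside parentheses:

```python
import re

def parse_year(raw):
    """Return the 4-digit year from strings like '(2019)' or '(I) (2019)', or None if absent."""
    match = re.search(r'\((\d{4})\)', raw)
    return int(match.group(1)) if match else None

raw_years = ['(2019)', '(1994)', '(I) (2019)', '(1972)']
print([parse_year(y) for y in raw_years])  # [2019, 1994, 2019, 1972]
```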

- Extract the runtimes

```python
for container in movie_div:
    # The runtime is in a <span class="runtime">, e.g. '131 min'
    runtime = container.find('span', class_='runtime').text
    time.append(runtime)
```

> Result

```
['131 min', '119 min', '132 min', '135 min', '108 min', '161 min', '122 min', '152 min', '135 min', '142 min', '209 min',
 '181 min', '113 min', '100 min', '113 min', '175 min', '109 min', '164 min', '152 min', '152 min', '148 min', '121 min',
 '137 min', '146 min', '169 min', '146 min', '129 min', '139 min', '178 min', '154 min', '180 min', '130 min', '125 min',
 '153 min', '130 min', '194 min', '142 min', '100 min', '136 min', '120 min', '132 min', '158 min', '147 min', '149 min',
 '149 min', '138 min', '88 min', '153 min', '155 min', '164 min']
```
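To compare or average runtimes, the `' min'` suffix has to go. A minimal sketch, assuming every value has the form `'<number> min'` as in the result above; `parse_runtime` is a hypothetical helper name:

```python
def parse_runtime(raw):
    """Convert a runtime string such as '131 min' into an integer number of minutes."""
    return int(raw.replace('min', '').strip())

raw_times = ['131 min', '119 min', '88 min']
print([parse_runtime(t) for t in raw_times])  # [131, 119, 88]
```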

- Extract the IMDb ratings

```python
for container in movie_div:
    # The rating is the text of the first <strong> tag in the container
    imdb = float(container.find('strong').text)
    imdb_ratings.append(imdb)
```

> Result

```
[8.0, 8.4, 8.6, 7.6, 8.0, 7.7, 8.5, 8.1, 8.0, 9.3, 7.9, 8.4, 8.0, 7.8, 7.6, 9.2, 7.7, 8.0, 9.0, 7.6, 8.8, 8.0, 8.0, 8.7, 8.6,
 8.4, 8.0, 8.8, 8.8, 8.9, 8.2, 7.9, 8.6, 8.3, 8.2, 7.8, 8.8, 7.7, 8.7, 8.1, 7.9, 8.2, 7.7, 8.5, 8.1, 8.1, 8.5, 8.1, 8.5, 8.4]
```

- Extract the Metascores

```python
for container in movie_div:
    # find() returns None when a movie has no Metascore, so check before reading .text
    metascore = container.find('span', class_='metascore')
    if metascore is not None:
        # The text carries trailing whitespace, e.g. '82        '
        metascores.append(metascore.text.strip())
    else:
        metascores.append(np.nan)
```

> Result

```
['82', '78', '96', '90', '58', '83', '59', '81', '91', '80', '94', '78', '51', '84', '73', '100', '83', '81', '84', '64',
 '74', '76', '93', '90', '74', '66', '74', '66', '92', '94', '75', '74', '96', '69', '69', '75', '82', '71', '73', '90',
 '93', '93', '86', '68', '79', '63', '88', '71', '67', '78']
```
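The Metascores are still strings, and missing ones are `np.nan`. A minimal sketch of converting them to integers while passing the NaN placeholders through. `to_int_or_nan` is a hypothetical helper, and the check relies on `np.nan` being an ordinary Python float (so `float('nan')` stands in for it here):

```python
import math

def to_int_or_nan(value):
    """Convert a numeric string to int; pass NaN (missing data) through unchanged."""
    if isinstance(value, float) and math.isnan(value):
        return value
    return int(value)

raw_metascores = ['82', '78', float('nan'), '100']
print([to_int_or_nan(m) for m in raw_metascores])  # [82, 78, nan, 100]
```

The same helper works for the vote counts, which are digit-only strings such as `'243378'`.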

- Extract the vote counts

```python
for container in movie_div:
    # The vote count is stored in the data-value attribute of <span name="nv">
    vote = container.find('span', attrs={'name': 'nv'})
    if vote is not None:
        votes.append(vote['data-value'])
    else:
        votes.append(np.nan)
```

> Result

```
['243378', '249378', '333107', '134433', '175187', '418425', '728676', '180025', '80965', '2206090', '264221', '684592',
 '65355', '167449', '359873', '1519634', '86850', '419421', '2186071', '598595', '1935107', '1001925', '194103', '960538',
 '1387921', '840607', '556357', '1761132', '1578877', '1732917', '1098527', '538627', '596931', '1191303', '302240',
 '998230', '1702639', '385422', '1587845', '826098', '182019', '486507', '265865', '755809', '804447', '1060520',
 '892216', '558987', '1273173', '1446860']
```
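Reading the `data-value` attribute rather than the visible text is convenient because the attribute can hold a plain number while the displayed text may be formatted. A minimal sketch on made-up markup (the comma-formatted display text is an assumption for illustration), which also shows that `Tag.get()` returns `None` for a missing attribute where `tag['data-value']` would raise a `KeyError`:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: one span with a data-value attribute, one without
html = '<span name="nv" data-value="243378">243,378</span><span name="nv">$165.36M</span>'
soup = BeautifulSoup(html, "html.parser")
first, second = soup.find_all('span', attrs={'name': 'nv'})

print(first['data-value'])       # prints 243378 (no thousands separator)
print(first.text)                # prints 243,378 (display text)
print(second.get('data-value'))  # prints None instead of raising KeyError
```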

- Extract the total US gross

```python
for container in movie_div:
    # Step three <span> tags forward from the votes span to reach the gross value
    gross = container.find('span', attrs={'name': 'nv'}).find_next(
        'span').find_next('span').find_next('span').text
    # Gross values look like '$165.36M'; when a movie has no gross, the chain lands on an
    # unrelated short span instead, so strings of 3 characters or fewer are treated as missing
    if len(gross) > 3:
        us_gross.append(gross)
    else:
        us_gross.append(np.nan)
```

> Result

```
['$165.36M', '$159.18M', '$53.37M', nan, '$0.35M', '$142.50M', '$335.45M', '$117.62M', '$108.05M', '$28.34M', nan,
 '$858.37M', nan, '$434.04M', '$45.06M', '$134.97M', '$0.43M', '$92.05M', '$534.86M', '$317.58M', '$292.58M',
 '$333.18M', nan, '$46.84M', '$188.02M', '$44.02M', '$57.14M', '$37.03M', '$315.54M', '$107.93M', '$116.90M',
 '$315.06M', '$10.06M', '$120.54M', '$85.08M', '$659.33M', '$330.25M', '$200.82M', '$171.48M', '$154.06M',
 '$18.10M', '$40.22M', '$220.16M', '$678.82M', '$167.77M', '$128.01M', '$422.78M', '$61.00M', '$187.71M', '$448.14M']
```
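To do arithmetic on the gross figures, the `$` prefix and `M` suffix have to be stripped. A minimal sketch returning the value in millions of US dollars; `parse_gross_millions` is a hypothetical helper, and it assumes every non-missing value has the `'$<number>M'` form seen in the result above:

```python
import math

def parse_gross_millions(raw):
    """Turn strings like '$165.36M' into 165.36 (millions of USD); keep NaN for missing values."""
    if isinstance(raw, float) and math.isnan(raw):
        return raw
    return float(raw.lstrip('$').rstrip('M'))

raw_gross = ['$165.36M', '$0.35M', float('nan')]
parsed = [parse_gross_millions(g) for g in raw_gross]
print(parsed)  # [165.36, 0.35, nan]
```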

- Tutorial Code 1

```python
import requests
from bs4 import BeautifulSoup
import numpy as np
from fake_useragent import UserAgent

# Create a UserAgent and build the request header
ua = UserAgent(verify_ssl=False)
headers = {'User-Agent': ua.random}

# Target site
url = 'https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv'

# Request the URL
req = requests.get(url, headers=headers)

# Check the response, e.g. <Response [200]>
print(req)

# Parse the HTML
soup = BeautifulSoup(req.text, "html.parser")

# Inspect the parse tree (soup.prettify() prints it as an indented tree)
print(soup.prettify())

# Lists for the extracted data
titles = []
years = []
time = []
imdb_ratings = []
metascores = []
votes = []
us_gross = []

movie_div = soup.find_all('div', class_='lister-item mode-advanced')

for container in movie_div:
    # Title
    name = container.h3.a.text
    titles.append(name)

    # Release year
    year = container.h3.find('span', class_='lister-item-year').text
    years.append(year)

    # Runtime
    runtime = container.find('span', class_='runtime').text
    time.append(runtime)

    # IMDb rating
    imdb = float(container.find('strong').text)
    imdb_ratings.append(imdb)

    # Metascore (find() returns None when it is missing)
    metascore = container.find('span', class_='metascore')
    if metascore is not None:
        metascores.append(metascore.text.strip())
    else:
        metascores.append(np.nan)

    # Votes
    vote = container.find('span', attrs={'name': 'nv'})
    if vote is not None:
        votes.append(vote['data-value'])
    else:
        votes.append(np.nan)

    # Total US gross (short strings mean the gross is missing)
    gross = container.find('span', attrs={'name': 'nv'}).find_next(
        'span').find_next('span').find_next('span').text
    if len(gross) > 3:
        us_gross.append(gross)
    else:
        us_gross.append(np.nan)

# Print the extracted data
print(titles)
print(years)
print(time)
print(imdb_ratings)
print(metascores)
print(votes)
print(us_gross)
```
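Once the seven lists are filled, a natural next step is to save them as one row per movie. A minimal sketch using the standard `csv` module; `write_movies_csv` and the column names are hypothetical choices, and the sample values are the first two entries of the results shown earlier:

```python
import csv
import io

HEADER = ['title', 'year', 'runtime', 'imdb_rating', 'metascore', 'votes', 'us_gross']

def write_movies_csv(file_obj, *columns):
    """Write a header row, then one row per movie by zipping the parallel lists."""
    writer = csv.writer(file_obj)
    writer.writerow(HEADER)
    # zip() stops at the shortest list, so every list needs one entry (or NaN) per movie
    writer.writerows(zip(*columns))

# Sample values copied from the first two results above
titles = ['Knives Out', '1917']
years = ['(2019)', '(2019)']
runtimes = ['131 min', '119 min']
imdb_ratings = [8.0, 8.4]
metascores = ['82', '78']
votes = ['243378', '249378']
us_gross = ['$165.36M', '$159.18M']

buffer = io.StringIO()
write_movies_csv(buffer, titles, years, runtimes, imdb_ratings, metascores, votes, us_gross)
print(buffer.getvalue())
```

With the real script, the lists can be written to a file instead, e.g. `with open('imdb_top1000.csv', 'w', newline='', encoding='utf-8') as f:` followed by `write_movies_csv(f, titles, years, time, imdb_ratings, metascores, votes, us_gross)` (the filename is only an example). This is also where the `np.nan` placeholders pay off: without them the lists would drift out of alignment and `zip()` would pair values from different movies.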
